Your download url is loading / ダウンロード URL を読み込んでいます

Your download url is loading / ダウンロード URL を読み込んでいます

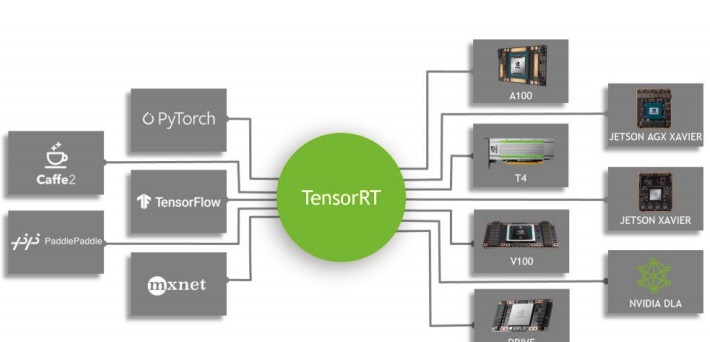

NVIDIA in the present day launched TensorRT™ 8, the eighth era of the corporate’s AI software program, which slashes inference time in half for language queries — enabling builders to construct the world’s best-performing search engines like google, advert suggestions and chatbots and provide them from the cloud to the sting.

TensorRT 8’s optimizations ship record-setting pace for language functions, operating BERT-Giant, one of many world’s most generally used transformer-based fashions, in 1.2 milliseconds. Previously, corporations needed to cut back their mannequin dimension which resulted in considerably much less correct outcomes. Now, with TensorRT 8, corporations can double or triple their mannequin dimension to attain dramatic enhancements in accuracy.

“AI fashions are rising exponentially extra complicated, and worldwide demand is surging for real-time functions that use AI. That makes it crucial for enterprises to deploy state-of-the-art inferencing options,” stated Greg Estes, vice chairman of developer packages at NVIDIA. “The most recent model of TensorRT introduces new capabilities that allow corporations to ship conversational AI functions to their clients with a stage of high quality and responsiveness that was by no means earlier than attainable.”

Whereas a lot hype has been produced concerning the speedy tempo of enterprise cloud deployments, in actuality we estimate lower than 25 % of enterprise workloads are at the moment being run within the cloud. That doesn’t negate the significance of the expansion of cloud computing – however it does set some parameters round simply how prevalent it at the moment is, and the way troublesome it's to maneuver enterprise workloads to a cloud structure.

In 5 years, greater than 350,000 builders throughout 27,500 corporations in wide-ranging areas, together with healthcare, automotive, finance and retail, have downloaded TensorRT almost 2.5 million instances. TensorRT functions may be deployed in hyperscale knowledge facilities, embedded or automotive product platforms.

Newest Inference Improvements

Along with transformer optimizations, TensorRT 8’s breakthroughs in AI inference are made attainable by means of two different key options.

Sparsity is a brand new efficiency method in NVIDIA Ampere structure GPUs to extend effectivity, permitting builders to speed up their neural networks by decreasing computational operations.

Quantization conscious coaching allows builders to make use of skilled fashions to run inference in INT8 precision with out dropping accuracy. This considerably reduces compute and storage overhead for environment friendly inference on Tensor Cores.

Broad Trade Help

Trade leaders have embraced TensorRT for his or her deep studying inference functions in conversational AI and throughout a variety of different fields.

Hugging Face is an open-source AI chief relied on by the world’s largest AI service suppliers throughout a number of industries. The corporate is working carefully with NVIDIA to introduce groundbreaking AI providers that allow textual content evaluation, neural search and conversational functions at scale.

“We’re carefully collaborating with NVIDIA to ship the absolute best efficiency for state-of-the-art fashions on NVIDIA GPUs,” stated Jeff Boudier, product director at Hugging Face. “The Hugging Face Accelerated Inference API already delivers as much as 100x speedup for transformer fashions powered by NVIDIA GPUs. With TensorRT 8, Hugging Face achieved 1ms inference latency on BERT, and we’re excited to supply this efficiency to our clients later this 12 months.”

GE Healthcare, a number one world medical know-how, diagnostics and digital options innovator, is utilizing TensorRT to assist speed up laptop imaginative and prescient functions for ultrasounds, a vital instrument for the early detection of ailments. This allows clinicians to ship the very best high quality of care by means of its clever healthcare options.